Contributed by weerd on from the redundant-department-of-redundancy dept.

It's been some weeks since the Networking hackathon, n2k8 in Japan, of which we've already seen lots of great coverage. One of the less obvious changes from n2k8 was that work started on a very cool new feature for pf(4) and relayd(8): Direct Server Return (DSR). During the General Hackathon, c2k8, in Edmonton, a lot of work was done on DSR, which means that OpenBSD load balancers can now be used in this specific setup too (with both IPv4 and IPv6 support, of course).

Below we'll look at what DSR is in a bit more detail, ask one of the main developers some questions about it and configure a simple setup that does DSR for IPv4 and IPv6.

Load balancing is often used to distribute load over multiple resources (CPU, RAM, disk, network, etc.) and to gain reliability through the redundancy of multiple servers (which also simplifies upgrades). In most cases the service is handled by a Virtual IP (VIP) on a load balancer, which then NATs requests to the backend servers. The replies travel from the server, via the load balancer, back to the client. However, there is often substantially more return traffic than incoming request traffic: when serving large files over HTTP, it is not uncommon to see 10 Mbit/s or less of incoming requests for 100 Mbit/s of return traffic.
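To put rough numbers on that bottleneck, here is a back-of-the-envelope Python calculation using the illustrative figures above (10 Mbit/s in, 100 Mbit/s out). It is only a sketch that ignores protocol overhead, and the function name is our own:

```python
def lb_forwarded_mbit(request_mbit: float, reply_mbit: float,
                      replies_via_lb: bool) -> float:
    """Traffic (Mbit/s) the load balancer itself has to forward.

    If replies return through the load balancer (the NAT case), it forwards
    both directions; if replies bypass it, only the incoming requests.
    """
    return request_mbit + (reply_mbit if replies_via_lb else 0.0)


if __name__ == "__main__":
    via_lb = lb_forwarded_mbit(10, 100, replies_via_lb=True)
    bypass = lb_forwarded_mbit(10, 100, replies_via_lb=False)
    print(f"replies via LB: {via_lb:.0f} Mbit/s, replies bypassing LB: {bypass:.0f} Mbit/s")
    # prints "replies via LB: 110 Mbit/s, replies bypassing LB: 10 Mbit/s"
```

Under these assumptions the load balancer forwards eleven times as much traffic when every reply has to pass through it.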

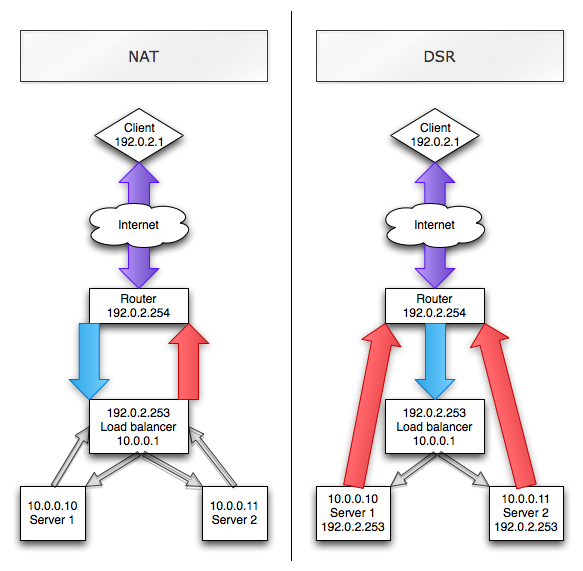

Since all return traffic has to flow through the load balancer, it soon becomes the bottleneck of your load balanced cluster. Enter DSR. With DSR, the server replies to the client by itself (hence the term "Direct Server Return"), so that the load balancer does not have to forward all traffic back to the client. The following image illustrates the difference between NAT and DSR based load balancing :

As you can see, the client (192.0.2.1) connects to the Load Balancer (LB) on its VIP (192.0.2.253). In the NAT case, the LB NATs the connection through to Server 1 or Server 2. The servers have the LB as their default gateway, so replies go back out through the LB to the client. IP-wise, the client sees 192.0.2.1:1024 -> 192.0.2.253:80, the LB sees 192.0.2.1:1024 -> 192.0.2.253:80 -> 10.0.0.10:80, and the server sees 192.0.2.1:1024 -> 10.0.0.10:80. In the DSR case, the LB does not NAT the connection at the IP layer but forwards the packet to either server virtually untouched, so the client, LB and server all see 192.0.2.1:1024 -> 192.0.2.253:80. Of course, the server needs to accept traffic for the VIP, so the VIP should be configured on a loopback interface (not on a real interface: you don't want ARP replies for the VIP to confuse the router). When the server replies, traffic goes directly from the server through the router to the client, bypassing the LB completely.
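The per-hop views described above can be modelled in a few lines of Python. This is a simplified sketch using the example addresses (TTLs and MAC addresses are ignored, and all names are hypothetical). It also shows why, in the NAT case, the server cannot simply answer the client directly: its reply would come from 10.0.0.10:80 rather than the VIP the client connected to.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src: str  # "address:port"
    dst: str

    def __str__(self) -> str:
        return f"{self.src} -> {self.dst}"


CLIENT = "192.0.2.1:1024"
VIP = "192.0.2.253:80"
SERVER1 = "10.0.0.10:80"


def nat_view() -> dict:
    """Request as seen at each hop when the LB rewrites the destination."""
    original = Flow(CLIENT, VIP)
    translated = Flow(CLIENT, SERVER1)  # destination rewritten by the LB
    return {"client": original, "lb_in": original,
            "lb_out": translated, "server": translated}


def dsr_view() -> dict:
    """With DSR the LB leaves addresses and ports alone and only picks the
    next hop (the server), so every hop sees the same flow."""
    flow = Flow(CLIENT, VIP)
    return {"client": flow, "lb_in": flow, "lb_out": flow, "server": flow}


def reverse(flow: Flow) -> Flow:
    return Flow(flow.dst, flow.src)


def direct_reply_ok(view: dict) -> bool:
    """Would the client recognise the server's reply if it bypassed the LB?
    Only if the reply mirrors the flow the client itself opened."""
    return reverse(view["server"]) == reverse(view["client"])


if __name__ == "__main__":
    for name, view in (("NAT", nat_view()), ("DSR", dsr_view())):
        print(f"{name}: server sees {view['server']}, "
              f"direct reply ok: {direct_reply_ok(view)}")
```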

As you can imagine, full DSR support requires quite a bit of work. Reyk Flöter (reyk@) added the initial bits of DSR support to relayd(8) during n2k8. In the commit message, Reyk mentions that this change was thought about in the onsen :

    CVSROOT:        /cvs
    Module name:    src
    Changes by:     reyk@cvs.openbsd.org    2008/05/06 19:49:29

    Modified files:
        usr.sbin/relayd: parse.y pfe_filter.c relayd.8 relayd.conf.5
                         relayd.h

    Log message:
    add an alternative "route to" mode to relayd redirections which maps
    to pf route-to instead of the default rdr. it is a first steps towards
    support for "direct server return" (dsr), an asynchronous mode where
    the load balanced servers send the replies to a different gateway like
    a l3 switch/router to handle higher amounts of return traffic.

    because the state handling in pf isn't optimal for this case yet, it
    just sees half of the TCP connection, the sessions are forced to time
    out after fixed number of seconds.

    discussed with many, thought about in the onsen

As you can see, with DSR, pf(4) on the load balancer only sees one half of the TCP session (more on this later), so it cannot enforce some of the strict requirements that its state checking currently provides. What is needed is some way for pf to track a TCP state with less information :

    CVSROOT:        /cvs
    Module name:    src
    Changes by:     henning@cvs.openbsd.org 2008/06/09 22:24:17

    Modified files:
        sys/net        : if_pfsync.c pf.c pf_ioctl.c pfvar.h

    Log message:
    implement a sloppy tcpstate tracker which does not look at sequence
    numbers at all. scary consequences; only tobe used in very specific
    situations where you don't see all packets of a connection, e. g.
    asymmetric routing. ok ryan reyk theo

Of course, sloppy state tracking can be used in other (asymmetric) situations too, but it is definitely required for DSR. Note that sloppy states are more easily attacked (read pf.conf(5) for more details), so you'll need to make sure traffic is sane elsewhere.
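To illustrate what "not looking at sequence numbers" gives up, here is a greatly simplified Python model; it is not pf's actual code. The strict tracker only accepts a packet whose sequence number falls inside the tracked window, while the sloppy one ignores sequence numbers and lets the TCP flags alone drive the state. The window values and the spoofed sequence number are made up for illustration.

```python
from dataclasses import dataclass

SEQ_SPACE = 2 ** 32  # TCP sequence numbers wrap around at 2^32


def seq_in_window(seq: int, rcv_next: int, window: int) -> bool:
    """True if seq lies in [rcv_next, rcv_next + window), modulo 2^32."""
    return (seq - rcv_next) % SEQ_SPACE < window


@dataclass
class TcpState:
    rcv_next: int  # next sequence number expected from the sender
    window: int    # window the receiver has advertised
    open: bool = True


def strict_accept(st: TcpState, seq: int, flags: str) -> bool:
    """Simplified 'keep state': the packet must fit the tracked window."""
    if not seq_in_window(seq, st.rcv_next, st.window):
        return False
    if "R" in flags:
        st.open = False
    return True


def sloppy_accept(st: TcpState, seq: int, flags: str) -> bool:
    """Simplified 'keep state (sloppy)': seq is deliberately ignored,
    only the TCP flags drive the state."""
    if "R" in flags:
        st.open = False
    return True


if __name__ == "__main__":
    spoofed_seq = 123_456_789  # an arbitrary, out-of-window sequence number
    for name, accept in (("strict", strict_accept), ("sloppy", sloppy_accept)):
        st = TcpState(rcv_next=1_000, window=65_535)
        ok = accept(st, spoofed_seq, "R")
        print(f"{name}: spoofed RST accepted={ok}, connection open={st.open}")
```

In this model a blind attacker's RST is dropped by the strict tracker but tears down the sloppy state, which is one reason sloppy states need sane traffic to be ensured elsewhere.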

With the developers now heading back home from an intense week of hacking, we have a fully working DSR implementation in the base OpenBSD install. I asked Reyk how he came to work on DSR and about his plans for future development. Reyk answered while waiting for his flight back to Germany :

weerd: When and why did you start work on DSR ?

reyk: pyr@ [Pierre-Yves Ritschard] and I had been thinking about implementing DSR for some time. It was somewhere on our TODO list, but with a fairly low priority. We had crazy ideas for implementing it, and all of them were just too complicated, so we never started working on it. But a few days before the n2k8 hackathon in Japan, which I really enjoyed, I had the idea to just use pf(4) route-to. Isn't it obvious? So I did some initial tests to verify that it works just before n2k8 and implemented it in relayd during the network hackathon.

weerd: So far, we've seen changes to pf and relayd. What other parts (if any) of the system did you have to change for DSR support ?

reyk: DSR is complete; it does not need any changes other than the ones I've done in relayd and, finally, the "sloppy" state tracking in pf. From a network administrator's perspective, you have to update your pf.conf to include an additional anchor that allows relayd to insert the route-to filter rules, so add 'anchor "relayd/*"' in the filter section in addition to the 'rdr-anchor "relayd/*"' for translation rules. The configuration in relayd.conf is quite simple, and it normally also requires binding the relay's external IP address to a loopback device on each load-balanced host.

weerd: So DSR is completely finished, nothing else needs to be done ?

reyk: Some testing needs to be done, of course. I will do some stress testing when I'm back in Germany, hooking it up to a 10G ProCurve switch and some fast machines. But it is much better if I get good feedback from the OpenBSD community about using DSR in different and maybe exotic setups. Does it work for you? Maybe I need to improve the documentation to give better examples of how to configure DSR on the relayd box and the load-balanced hosts.

weerd: Can you tell us something about the implementation details ?

reyk: During n2k8 I implemented DSR with the limitation that all sessions had a fixed, absolute timeout, 10 minutes by default. Sessions couldn't run longer than this, and they also did not time out any earlier, because I basically had to bump up the tcp.opening timeout. pf's sophisticated stateful filtering cannot handle DSR TCP connections where you only see the requesting half of the stream, but I do need to keep a state to remember the target host from the pool of load-balanced hosts.

I talked to henning@ [Henning Brauer] about a way to add an optional mode to pf(4) where it does a loose state handling. A mode that is enough to create and track a pf TCP state, recognize RSTs and FINs, and that works with half streams and async routing. Something that is horribly insecure and should never be used on real firewalls and if you don't know what you're doing. We initially didn't find a name for it so we talked about "loose state", "contrack", "iptables/netfilter mode", and finally "keep state (sloppy)".

Henning finished sloppy mode during c2k8 and I was able to update relayd to use this mode instead of the fixed-timeout workaround I used before. I made cosmetic adjustments to pf's sloppy state handling to detect half TCP connections on the fly - I made it even sloppier to work with DSR.
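The state the load balancer has to keep can be sketched as a small table mapping each client connection to the backend it was routed to. This is a toy Python model, not relayd or pf code: the round-robin backend choice and the idle and closing timeouts are assumptions, and only the 600-second fixed lifetime comes from the interview. It contrasts the n2k8 workaround (an absolute lifetime) with sloppy-style tracking that reacts to activity and to FIN/RST flags.

```python
import itertools
from dataclasses import dataclass

FIXED_TIMEOUT = 600   # seconds: "10 minutes by default" (from the interview)
IDLE_TIMEOUT = 300    # hypothetical idle timeout for an established entry
CLOSING_TIMEOUT = 10  # hypothetical short timeout once a FIN/RST was seen


@dataclass
class Entry:
    backend: str
    created: float
    last_seen: float
    closing: bool = False


class DsrStateTable:
    """Toy model (not pf or relayd code): remember which backend each client
    connection was routed to, seeing only the client -> VIP half of TCP."""

    def __init__(self, backends, policy="sloppy"):
        self._next_backend = itertools.cycle(backends)  # round-robin: assumed
        self.policy = policy                            # "fixed" or "sloppy"
        self.states = {}

    def _expired(self, e, now):
        if self.policy == "fixed":
            # n2k8 workaround: an absolute lifetime, regardless of activity
            return now - e.created >= FIXED_TIMEOUT
        limit = CLOSING_TIMEOUT if e.closing else IDLE_TIMEOUT
        return now - e.last_seen >= limit

    def packet(self, key, flags, now):
        """Record one client packet and return the backend it is routed to."""
        e = self.states.get(key)
        if e is None or self._expired(e, now):
            e = Entry(next(self._next_backend), created=now, last_seen=now)
            self.states[key] = e
        e.last_seen = now
        if self.policy == "sloppy" and ("F" in flags or "R" in flags):
            e.closing = True  # start winding the entry down
        return e.backend


if __name__ == "__main__":
    key = ("192.0.2.1", 1024, "192.0.2.253", 80)
    for policy in ("fixed", "sloppy"):
        table = DsrStateTable(["10.0.0.10", "10.0.0.11"], policy)
        # one long download: a client packet every 100 s for about 12 minutes
        used = {table.packet(key, "A", now=t) for t in range(0, 800, 100)}
        print(policy, sorted(used))
```

With the fixed policy, a download still running after 10 minutes gets a fresh entry and is routed to the other server, which would break the connection; the activity-based policy keeps it on the same backend.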

weerd: What are future plans for DSR and / or relayd ?

reyk: The future is already here - I implemented another nice thing in relayd during c2k8 that uses markus@'s BINDANY and divert-reply extensions to allow fully transparent relay sessions. It allows layer 7 relays to connect to the target host on the other side using the original client's IP address, which makes X-Forwarded-For HTTP headers unnecessary in some scenarios and allows fancy things like fully transparent SSL encapsulation. But that is another story...

weerd: Any finishing comments on DSR ?

reyk: Don't use it. It is something that is required to handle really high amounts of bandwidth, like streams, but it totally negates the security benefits of real load balancing with pf redirections and the extra security in our packet filter. So unless you really need it, you should get a faster box and run the traditional mode instead.

There is nothing comparable to the stateful filtering in pf; the buzzword "SPI" (stateful packet inspection) by itself says nothing about the quality of the checks. The new sloppy mode is scary and usable at the same time, but it is even worse that other firewalls can't do much more than our sloppy mode.

weerd: Thank you Reyk for your time in answering these questions and your work on OpenBSD in general and DSR in particular.

Let's have a look at how to configure DSR with OpenBSD. We'll create the setup depicted in the image above, with all systems (router, load balancer and servers) running OpenBSD. We'll configure our service for both IPv4 and IPv6, of course. In this setup I'm using a set of ALIX 2c3 machines, which have vr(4) interfaces.

- Client configuration :

- New /etc/hostname.vr0 :

    inet 192.0.2.1 255.255.255.128 192.0.2.127
    inet6 3ffe::1 64

- New /etc/mygate (the Router in our example) :

    192.0.2.2
    3ffe::

- Router configuration :

- New /etc/hostname.vr0 (network to loadbalancer and webservers) :

    inet 192.0.2.254 255.255.255.128 192.0.2.255
    inet alias 10.0.0.2 255.255.255.0 10.0.0.255
    inet6 3ffe:1:: 64
    inet6 alias 3ffe:2:: 64

- New /etc/hostname.vr1 (network to client) :

    inet 192.0.2.2 255.255.255.128 192.0.2.127
    inet6 3ffe:: 64

- Configure the router to forward IPv4 and IPv6 traffic :

    echo 'net.inet.ip.forwarding=1' >> /etc/sysctl.conf
    echo 'net.inet6.ip6.forwarding=1' >> /etc/sysctl.conf

- Load balancer configuration :

- New /etc/hostname.vr0 :

    inet 192.0.2.253 255.255.255.128 192.0.2.255
    inet alias 10.0.0.1 255.255.255.0 10.0.0.255
    inet6 3ffe:1::1 64
    inet6 alias 3ffe:2::1 64

- New /etc/mygate (point to the Router) :

    192.0.2.254
    3ffe:1::

- New /etc/pf.conf :

    rdr-anchor "relayd/*"
    anchor "relayd/*"

- New /etc/relayd.conf :

    webserver1="10.0.0.10"
    webserver2="10.0.0.11"
    v6server1="3ffe:2::10"
    v6server2="3ffe:2::11"
    vipaddress="192.0.2.253"
    v6address="3ffe:1::1"

    table <webservers> { $webserver1 $webserver2 }
    table <v6servers> { $v6server1 $v6server2 }

    redirect www {
        listen on $vipaddress port http interface vr0
        route to <webservers> port www check http "/" code 200 interface vr0
    }

    redirect wwwv6 {
        listen on $v6address port http interface vr0
        route to <v6servers> port www check http "/" code 200 interface vr0
    }

- Enable relayd and pf on boot :

    echo 'relayd_flags=""' >> /etc/rc.conf.local
    echo 'pf=YES' >> /etc/rc.conf.local

- Webserver configuration (x 2; the second server uses 10.0.0.11 and 3ffe:2::11) :

- New /etc/hostname.vr0 (which configures the VIP address on lo0) :

    inet 10.0.0.10 255.255.255.0 10.0.0.255
    inet6 3ffe:2::10 64
    !ifconfig lo0 alias 192.0.2.253/32
    !ifconfig lo0 inet6 alias 3ffe:1::1/128

- New /etc/mygate (point to the Router) :

    10.0.0.2
    3ffe:2::

- Configure Apache to listen on both IPv4 and IPv6.

    echo 'Listen 0.0.0.0 80' >> /var/www/conf/httpd.conf
    echo 'Listen :: 80' >> /var/www/conf/httpd.conf

- Configure Apache to start on boot with IPv6 support :

echo 'httpd_flags="-U"' >> /etc/rc.conf.local

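The `check http "/" code 200` option in the relayd.conf above makes relayd periodically verify each host in the table, and only hosts that pass keep receiving new connections. A rough Python analogue of that check is sketched below. Sending a HEAD request and the 2-second timeout are assumptions of this sketch, and the function names are hypothetical, so it only approximates what relayd actually does:

```python
import http.client


def check_http(host: str, port: int = 80, path: str = "/", code: int = 200,
               timeout: float = 2.0) -> bool:
    """Rough analogue of relayd's `check http "/" code 200`: the host is up
    only if a request for `path` returns the expected status code.
    Sending HEAD and the 2-second timeout are assumptions of this sketch."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("HEAD", path)
        return conn.getresponse().status == code
    except (OSError, http.client.HTTPException):
        return False  # refused, timed out or not speaking HTTP: treat as down
    finally:
        conn.close()


def live_hosts(table, check=check_http):
    """Keep only the table entries that pass the check (simplified: relayd
    takes failing hosts out so they get no new connections)."""
    return [host for host in table if check(host)]


if __name__ == "__main__":
    # The backends from the relayd.conf example above.
    print(live_hosts(["10.0.0.10", "10.0.0.11"]))
```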
That is basically all that's necessary for a (simplified but working) DSR setup. If you run tcpdump(8) on the load balancer while making a request from the client, you see traffic coming from the client (mostly ACKs, and some FINs at the end of the request) but nothing going back. You do see all incoming traffic twice, though: once from the router to the load balancer and once from the load balancer to the webserver. Closer inspection on the router shows that traffic from the client to the service on the server network has the router's source MAC address and the load balancer's destination MAC address, while return traffic has the router's destination MAC address but the source MAC address of one of the two load-balanced webservers.

Of course, this setup focuses mainly on DSR, but note that all components (including the router and the load balancer) can be made redundant using carp(4) and pfsync(4), creating a completely redundant environment for serving just about anything you want. Also note that this is a very basic setup to demo the feature; there's no filtering at all. Please heed Reyk's warning and do not use this in production unless you really need to. That being said, if you do have a use for this sort of setup, please give the latest snapshot a spin and report success or failure back to the developers.

This is a very elegant solution adding to the growing list of cool features found in relayd and pf (and OpenBSD in general). Thanks again to Reyk and also to Henning and Pierre-Yves and the other developers involved.

By Anonymous Coward (216.68.196.92) on

So when does OpenBSD put Cisco out of business?

Thanks to all the hard working developers. Hope the corporate world starts funding OpenBSD more.

Would love to buy the next edition of The Book of PF, updated for all the upcoming work.

Peace.

By Anonymous Coward (24.37.242.64) on

Absolutely here too!

By Anonymous Coward (212.0.160.18) on

never :)

By Anonymous Coward (71.138.117.69) on

...no, that's when Cisco puts OpenBSD out of business. [smiley rampage here]

By Anonymous Coward (212.0.160.18) on

>

> ...no, that's when Cisco puts OpenBSD out of business. [smiley rampage here]

>

you don't have a clue, do you? (:

By don cipo (doncipo) doncipo@redhat.polarhome.com on

By Fiets (193.0.10.229) fiets@fietsie.nl on www.fietsie.nl

please delete this commit on sight.

By Anonymous Coward (2001:9b0:1:1017:a00:20ff:fefe:9a37) on

Or am I missing something? Do commercial LBs call this DSR? Or is this a case of "let's make up a new name and then everyone will think we did it first"?

Again. I think this is a great feature. Well done.

By Brad (2001:470:8802:3:216:41ff:fe17:6933) on

There hasn't been any new name made up. DSR is a standard term used by pretty much every vendor that deals with load balancing. In this case Linux developers have made up their own term.

> Or am I missing something? Do commercial LBs call this DSR? Or is this a case of "let's make up a new name and then everyone will think we did it first"?

Ask the Linux developers why they made up their own name.

By Anonymous Coward (81.83.46.237) on

Because now they can't have bugs in DSR ... ;o)

By Igor Sobrado (sobrado) sobrado@ on

>

> There hasn't been any new name made up. DSR is a standard term used by pretty much every vendor that deals with load balancing. In this case Linux developers have made up their own term.

In the same way Linux developers use the term masquerading instead of the industry standard (and more clear) NAT/PAT. Linux using a name for a feature does not make it the right term.

By René (91.42.55.117) on

Masquerading is not NAT, it is a special case of NAT. Only if it is Source-NAT with a dynamic IP-address it is called Masquerading in Linux iptables.

By Anonymous Coward (142.22.16.58) on

> > the industry standard (and more clear) NAT/PAT. Linux using a name

> > for a feature does not make it the right term.

>

> Masquerading is not NAT, it is a special case of NAT. Only if it is

> Source-NAT with a dynamic IP-address it is called Masquerading in Linux

> iptables.

In iptables, maybe. But they originated the term in ipchains, where all forms of NAT were called Masquerading.

By Anonymous Coward (62.4.77.94) on

Foundry calls it "direct server return", and they're one of the largest & oldest vendors of boxes that do this stuff...

By René (91.42.55.117) on http://openbsd.maroufi.net

What exactly is the difference between iptables stateful filtering and pf stateful filtering. Why is iptables stateful filtering insecure?

By Martin Toft (martintoft) on http://martintoft.dk

I think they're comparing pf to netfilter/iptables without the connection tracking module activated. In other words, stateful filtering is default in pf and with netfilter/iptables you need to use a module. If this is the case, the comparison is not fair, IMHO. If it's not the case, I would also like to know in which way netfilter/iptables' stateful filtering isn't as secure as pf's :-)

After all, I use netfilter/iptables on a few boxes, where I cannot dictate the choice of OS...

By Anonymous Coward (81.83.46.237) on

They talk about the new "sloppy mode" for loose state handling as being "horribly insecure and should never be used on real firewalls and if you don't know what you're doing". They also refer to it as "iptables/netfilter mode". So, my guess is that it has to do with how netfilter does its state matching?

By henning (24.68.226.27) on

>

> I think they're comparing pf to netfilter/iptables without the connection tracking module activated.

no. linux stateful filtering / connection tracking is basically what the new "sloppy" state tracker does in openbsd (at least it was last time i checked...). scary. In pf, we do much more to ensure that a packet really belongs to the connection it seems to belong to - last but not least, the sequence number tracking.

By Anonymous Coward (24.37.242.64) on

>

> What exactly is the difference between iptables stateful filtering and pf stateful filtering. Why is iptables stateful filtering insecure?

Correct me if I'm wrong, but isn't Linux's firewall package pseudo-stateful, in the sense that it relies on only reading the TCP flags rather than analyzing everything in depth and confirming that it's not a spoofed flag or such?

By René (91.42.55.117) on

>

> Correct me if I'm wrong, but isn't Linux's firewall package pseudo-stateful, in the sense that it relies on only reading the TCP flags rather than analyzing everything in depth and confirming that it's not a spoofed flag or such?

I don't know how this is really implemented, but there is a state module for iptables (-m state). With this module you can have states for TCP and UDP, and UDP has no real state or flags for a handshake.

By Ryan McBride (210.138.60.53) mcbride@openbsd.org on

> Correct me if I'm wrong, but isn't Linux's firewall package pseudo-stateful, in the sense that it relies on only reading the TCP flags rather than analyzing everything in depth and confirming that it's not a spoofed flag or such?

I think part of the confusion is that netfilter.org's documentation is a horrible mess; for example, the FAQ still lists the 2003 state of affairs, which required you to apply a kernel patch to get stateful filtering. It looks like that has been integrated for a few years now, and it appears to have TCP state tracking that aims to follow the same Guido van Rooij paper that PF state tracking is based on. I've not taken the time to trace through the whole netfilter calling path, so it's not clear to me under which conditions this code is enabled; I'm guessing, based on other comments in this thread, that it's not enabled by default.

Also, based on comments in the code like the following, it seems that the implementors have taken a more open approach when dealing with some edge conditions:

    /* "Be conservative in what you do, be liberal in what you accept from others."
       If it's non-zero, we mark only out of window RST segments as INVALID. */
    static int nf_ct_tcp_be_liberal __read_mostly = 0;

In PF we would take a much more aggressive approach here; this is no longer the Internet of 1983, and in general it's the firewall's function to NOT be liberal in what we accept from others.

By Anonymous Coward (206.248.190.11) on

>

> What exactly is the difference between iptables stateful filtering and pf stateful filtering. Why is iptables stateful filtering insecure?

Because iptables "stateful" filtering doesn't check sequence numbers apart from the initial TCP handshake.

By Julien Mabillard (62.2.143.162) on

> >

> > What exactly is the difference between iptables stateful filtering and pf stateful filtering. Why is iptables stateful filtering insecure?

>

> Because iptables "stateful" filtering doesn't check sequence numbers apart from the initial TCP handshake.

Actually, TCP state checking in pf is composed of several coherent checks: not only flags and sequence numbers, but also window size coherence. Together these give very solid stateful control, taking into account all relevant TCP properties.

Old but still interesting, have a look at:

http://www.madison-gurkha.com/publications/tcp_filtering/tcp_filtering.ps

By Krunch (91.106.245.207) on

> >

> > What exactly is the difference between iptables stateful filtering and pf stateful filtering. Why is iptables stateful filtering insecure?

>

> Because iptables "stateful" filtering doesn't check sequence numbers apart from the initial TCP handshake.

Actually, it looks like iptables _can_ do the same checks as pf's "keep state", but you have to specify everything yourself; adding -m state is not enough.

Beware: I have very little experience with iptables (I can't really think of any reason to use anything other than pf for firewalling), and this comment is based only on IRC discussion and RTFM.

By Anonymous Coward (2001:738:0:401:21b:63ff:fe9f:4527) on

please support IPv6 in your DSR also. We are using pf on FreeBSD for IPv6 load balancing. It was hard to persuade Linux people that pf can do load balancing; they are very fond of the LVS solution. They might abandon Linux Virtual Server for IPv4 if they have this feature (in LVS it is called direct routing).

By Anonymous Coward (70.173.232.231) on

> please support IPv6 in your DSR also. We are using pf on FreeBSD for IPv6 load balancing. It was hard to persuade Linux people that pf can do load balancing; they are very fond of the LVS solution. They might abandon Linux Virtual Server for IPv4 if they have this feature (in LVS it is called direct routing).

>

>

from the first paragraph of this article "(of course, with both IPv4 and IPv6 support)". Also, as noted in earlier comments, everyone except Linux calls it DSR not DR.

By Rich (195.212.199.56) on

On the subject of the need to employ sloppy state tracking, would it be possible for the servers to pass back the full reply direct to the client, and at the same time pass back partial replies back to the load balancer (just packet header information maybe - that sort of thing), and then the load balancer could maybe plug this partial reply back into pf and so close the loop (or at least reduce the "sloppiness level") in pf?

Just an idea - I have no idea what I'm talking about :-)

By Ryan McBride (210.138.60.53) mcbride@openbsd.org on

> On the subject of the need to employ sloppy state tracking, would it be possible for the servers to pass back the full reply direct to the client, and at the same time pass back partial replies back to the load balancer

This would be possible, but it requires modification to the web server code, which may or may not be possible or desirable. Also, the web server would now have to send up to double the number of packets, and in many cases it's the packet count and not the packet size that determines the performance cost.

By Rich (195.212.199.56) on

Would it require modification of the web server code? Couldn't the split be made by the pf running on each of the servers? That way, it would be completely transparent to the application (web server).

By Ryan McBride (210.138.60.53) mcbride@openbsd.org on

> > This would be possible, but it requires modification to the web server code,

Sorry, I should have said web server OS. Which unfortunately may not even be OpenBSD or even Unix.

Actually, if the webservers are running OpenBSD, there is arguably little benefit to the extra checks that all this work would provide. Just run the load balancer in DSR mode, and run PF on each firewall.

> Would it require modification of the web server code? Couldn't the split be made by the pf running on each of the servers? That way, it would be completely transparent to the application (web server).

Adding the overhead of communicating back to the load balancer counteracts the whole point of doing DSR, which is for the load balancer to get out of the way of the webservers as much as possible.

By henning (24.68.226.27) on

>

> On the subject of the need to employ sloppy state tracking, would it be possible for the servers to pass back the full reply direct to the client, and at the same time pass back partial replies back to the load balancer (just packet header information maybe - that sort of thing), and then the load balancer could maybe plug this partial reply back into pf and so close the loop (or at least reduce the "sloppiness level") in pf?

that completely negates the reason for DSR. as in, it would perform almost the same as going the nat way.